In inexact line search (a numerical optimisation technique) the step length (or learning rate) must be decided. In connection to that the Wolfe conditions are central. Here I will give an example showing why they are useful. More on this topic can be read elsewhere, e.g. in the book of Nocedal and Wright (2006), “Numerical Optimization”, Springer.

Wolfe conditions

The Wolfe conditions consists of the sufficient decrease condition (SDC) and curvature condition (CC):

with .

The strong Wolfe conditions are:

with .

Example

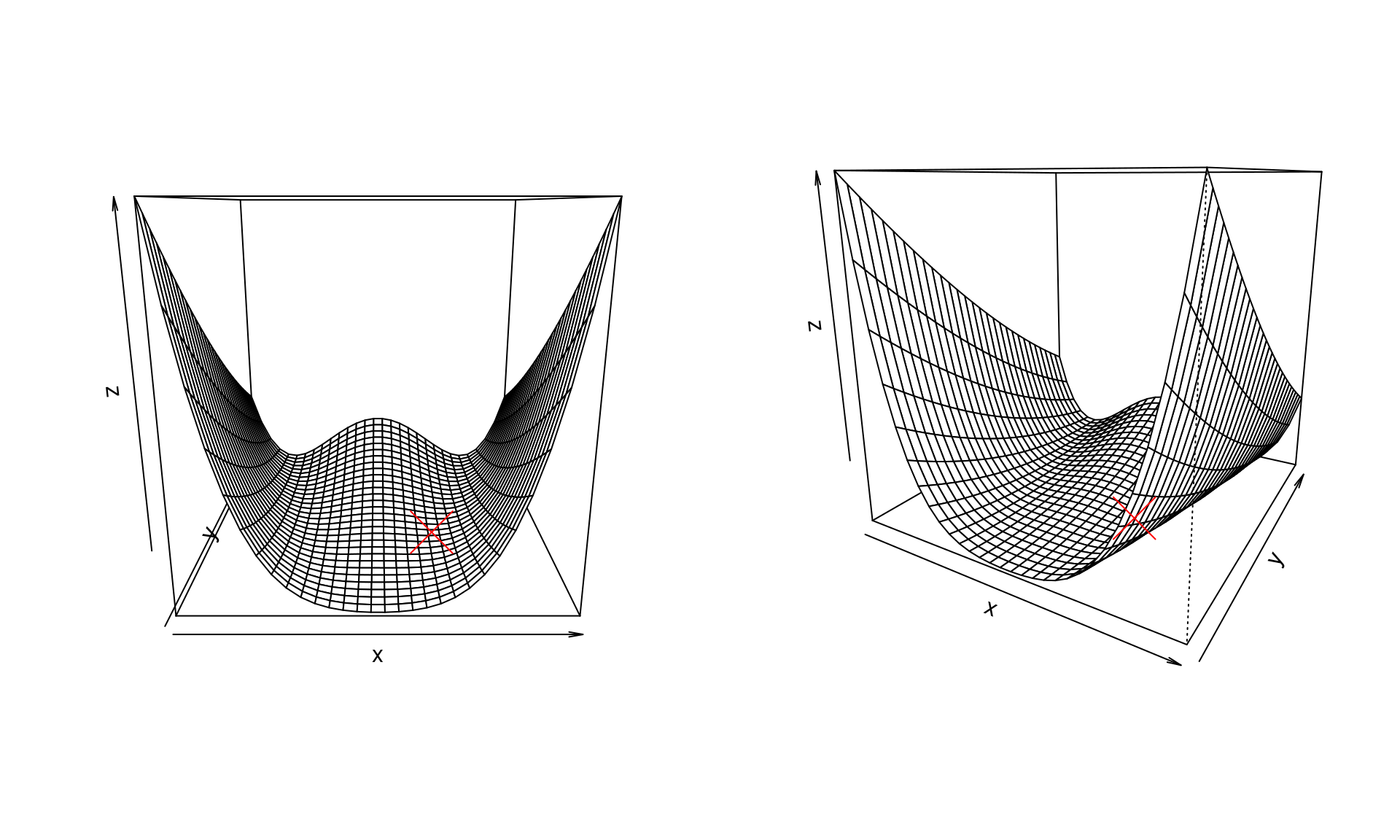

We use the Rosenbrock function to illustrate these conditions when choosing step length. We use a classic version, namely Its global minimum is at and the gradient is

A few illustrations of the function (the global minimum marked with a red cross):

Let and , and further for easier notation. The line search is then for direction and step length . In determining the step length the function is often used. It tells us the value of the function we are minimising for any given step length . Recall that the current position is and the direction is chosen. The only thing left to choose is . So to make that more clear, we refer to instead of .

The aim is that , and if the direction chosen is a descent direction (e.g. the negative gradient), then for sufficiently small this will be possible. But just using as a criteria is not sufficient to ensure convergence, which is why the Wolfe conditions are used.

Now, say we start the line search at then the gradient at that point is

Say we to a line search from in the direction of the negative gradient given by

In we have that

Note that for then so instead smaller values of are tried.

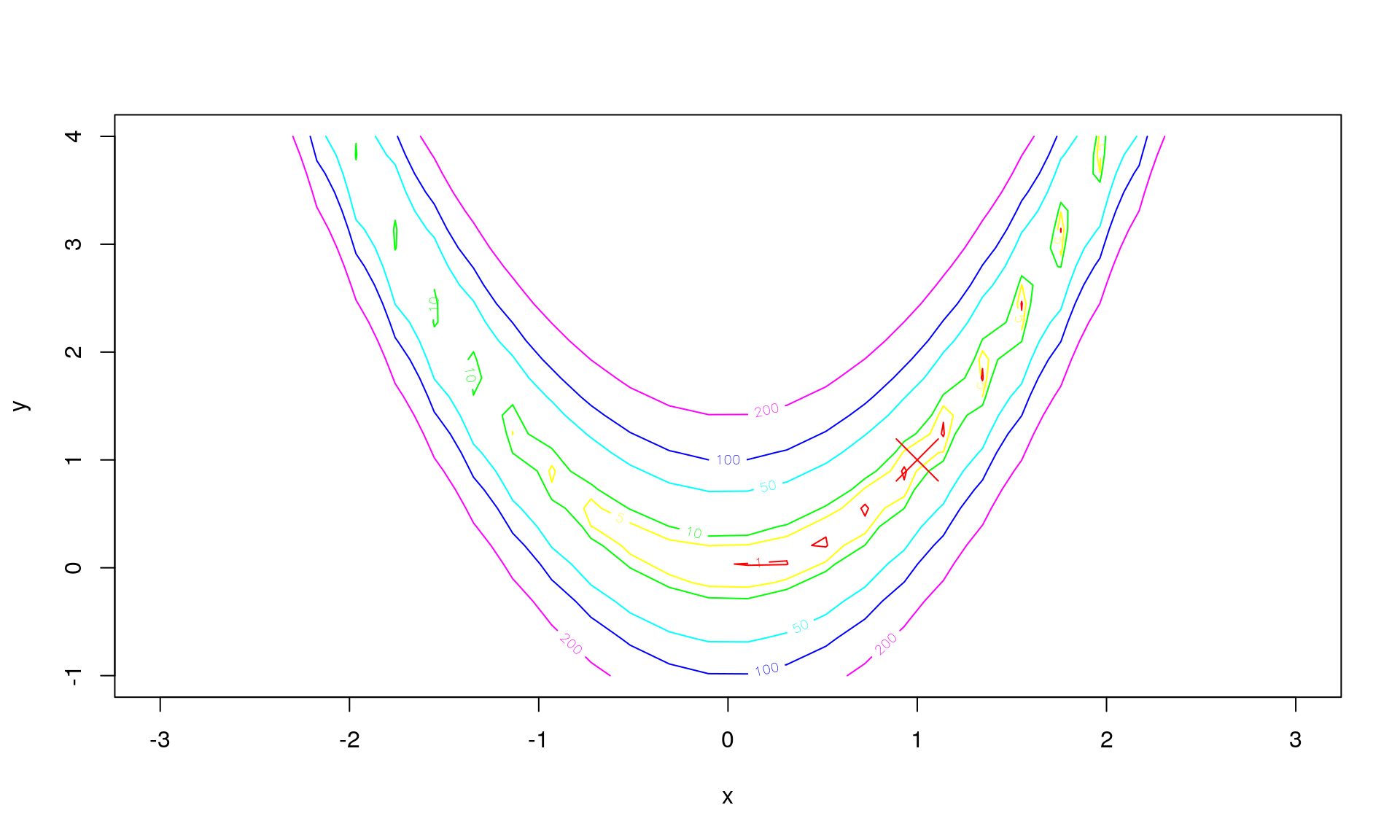

The path for between and (chosen to make this example illustrative) is then:

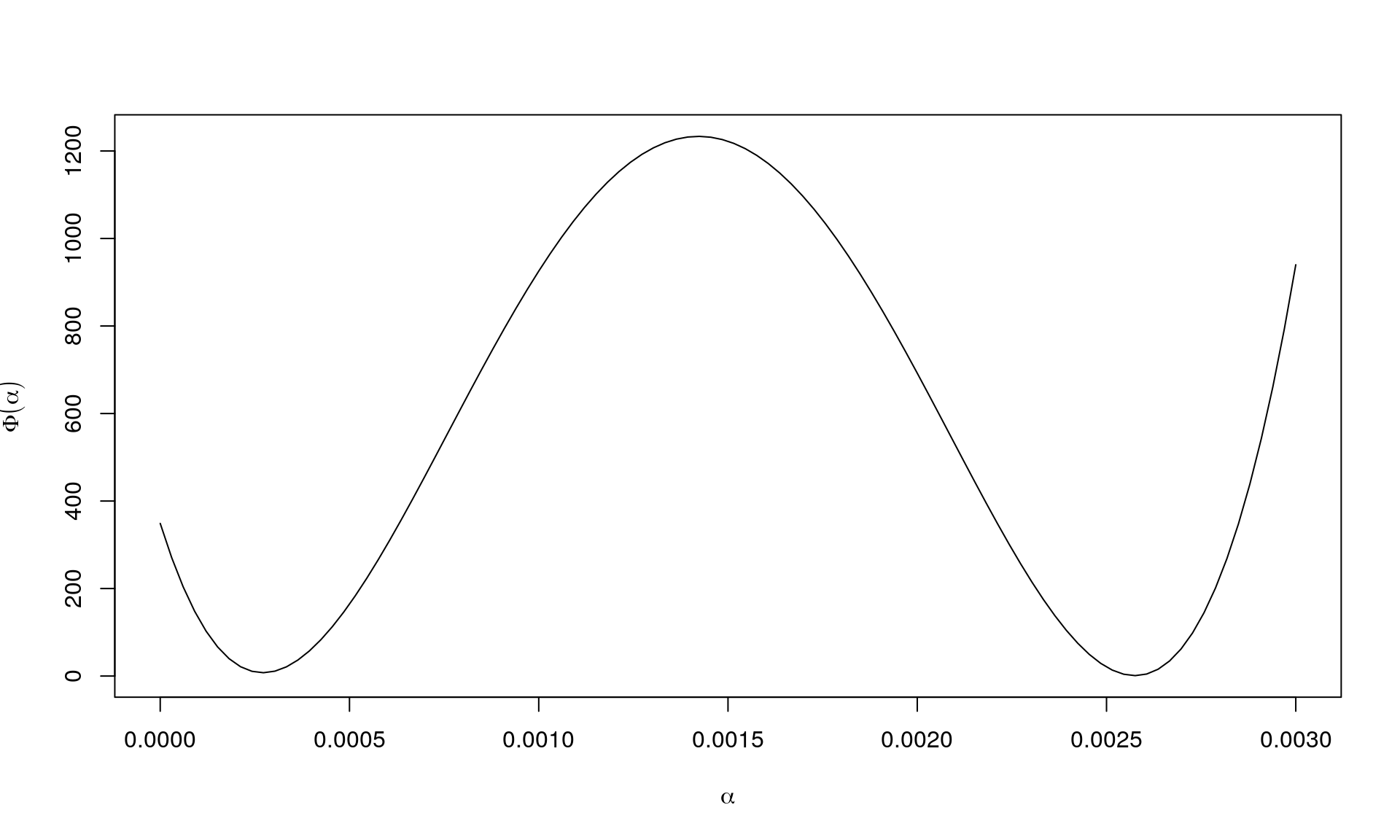

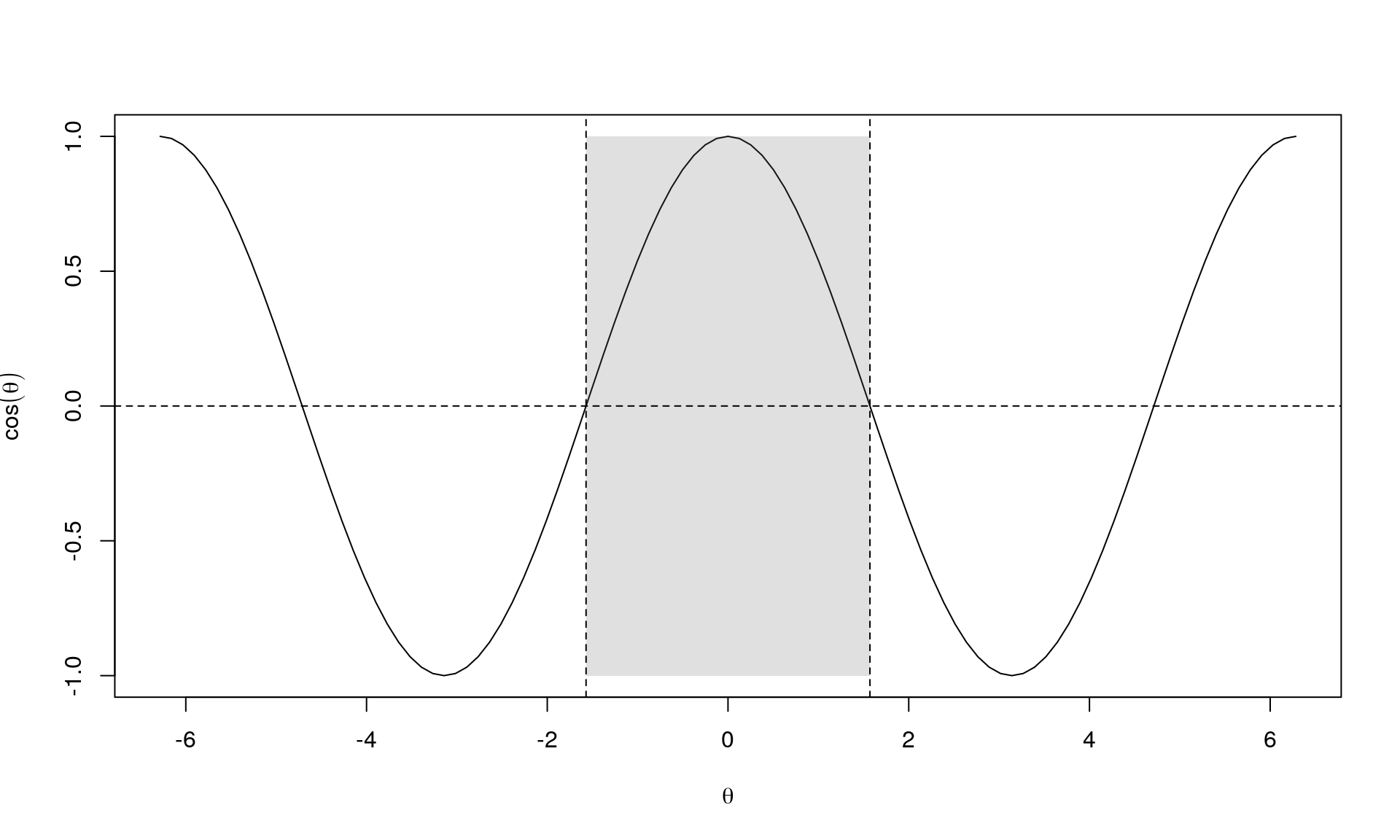

This can instead be shown by visualising instead as this is a univariate function, and can be difficult/impossible to visualise directly as in this example. For this example, looks like this:

As seen, if is sufficiently small, then we move to a position with a smaller value for . But we can also end up in a place with a higher value (although we are moving in the descent direction).

The sufficient decrease condition

We now consider the Wolfe conditions. The SDC (sufficient decrease condition) is with . Let us disect this condition.

First, we focus on . Note that (the latter equality in an Euclidian setting). What do we know about ? We require that is a descent direction (“point in the same way as the negative gradient, ”) and thus the angle between and the negative gradient, , is .

Instead of the negative gradient, we consider the gradient. So multiplying with means that .

Further, is a value in . And as , and we have that . In other words, the condition can be written as where and . So a stright line with negative slope.

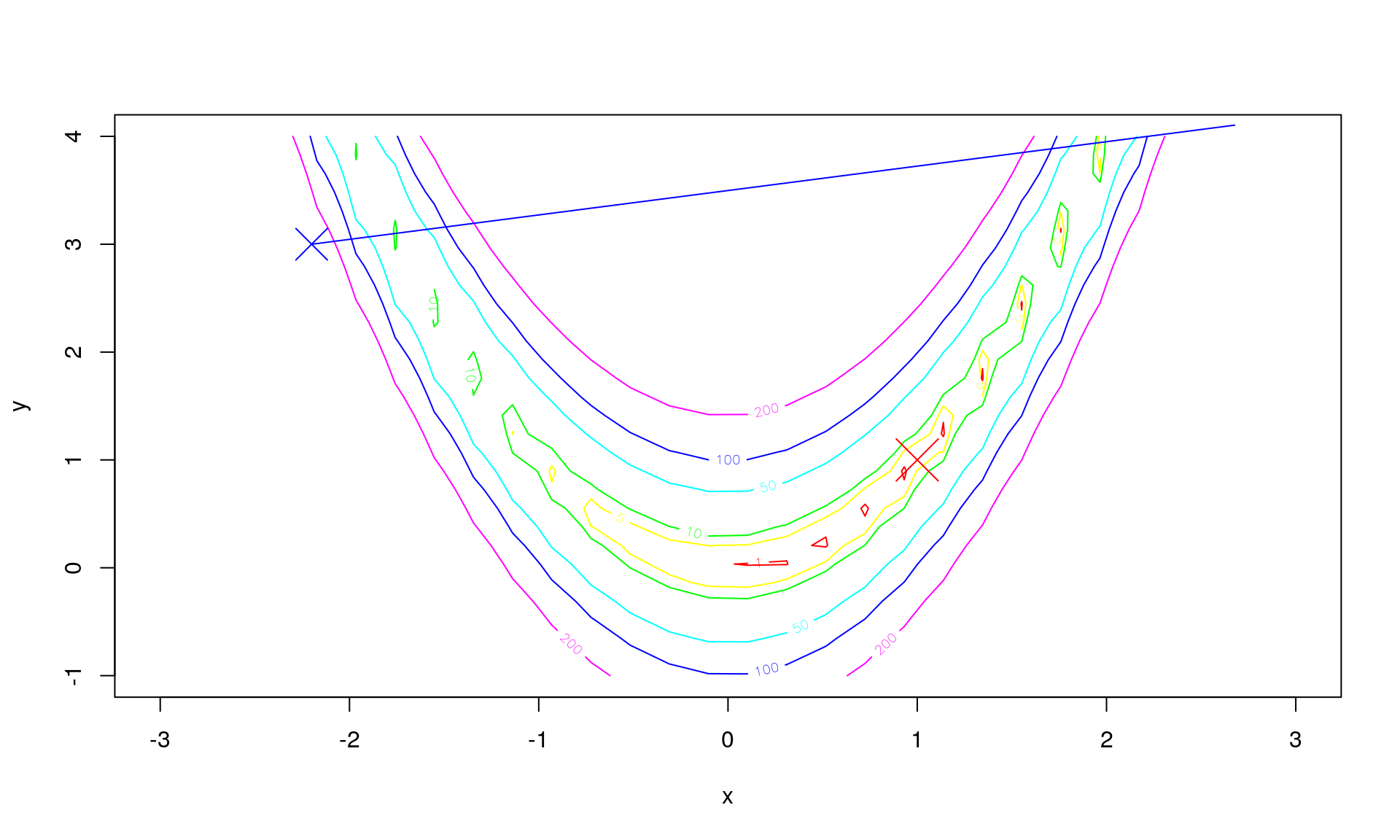

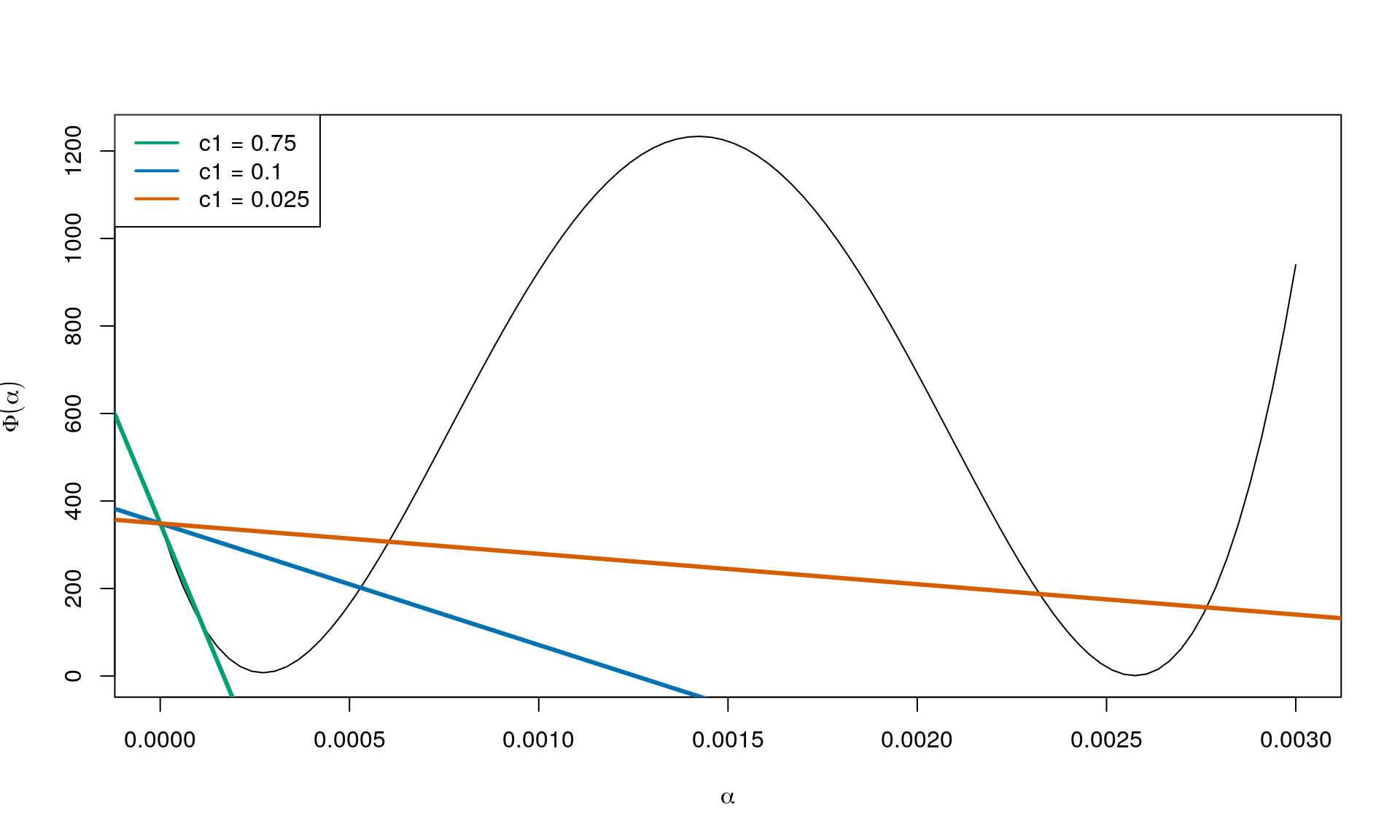

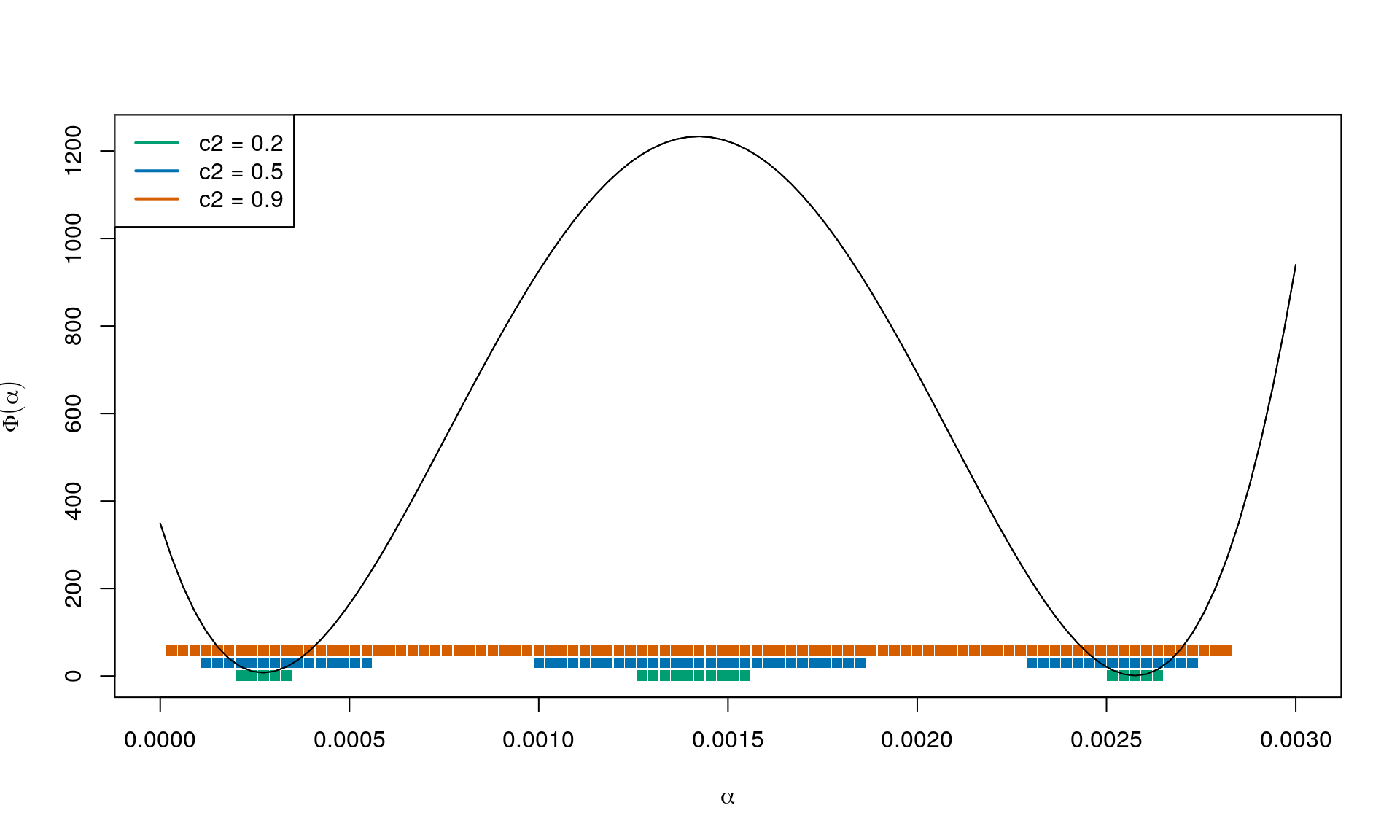

In this case, and (using ). See below for some choices of :

In other words, varying from to gives straight lines from for to for , where the latter corresponds to the tangent of at .

To see this note that at , the tangent to is . This should be the same as the directional derivative of the objective function in the direction of . Which exactly happens for .

So the factor makes it possible to go from the extreme cases with a horizontal line to that of the directional derivative of the objective function in the direction of .

The curvature condition

The curvature condition (CC) is

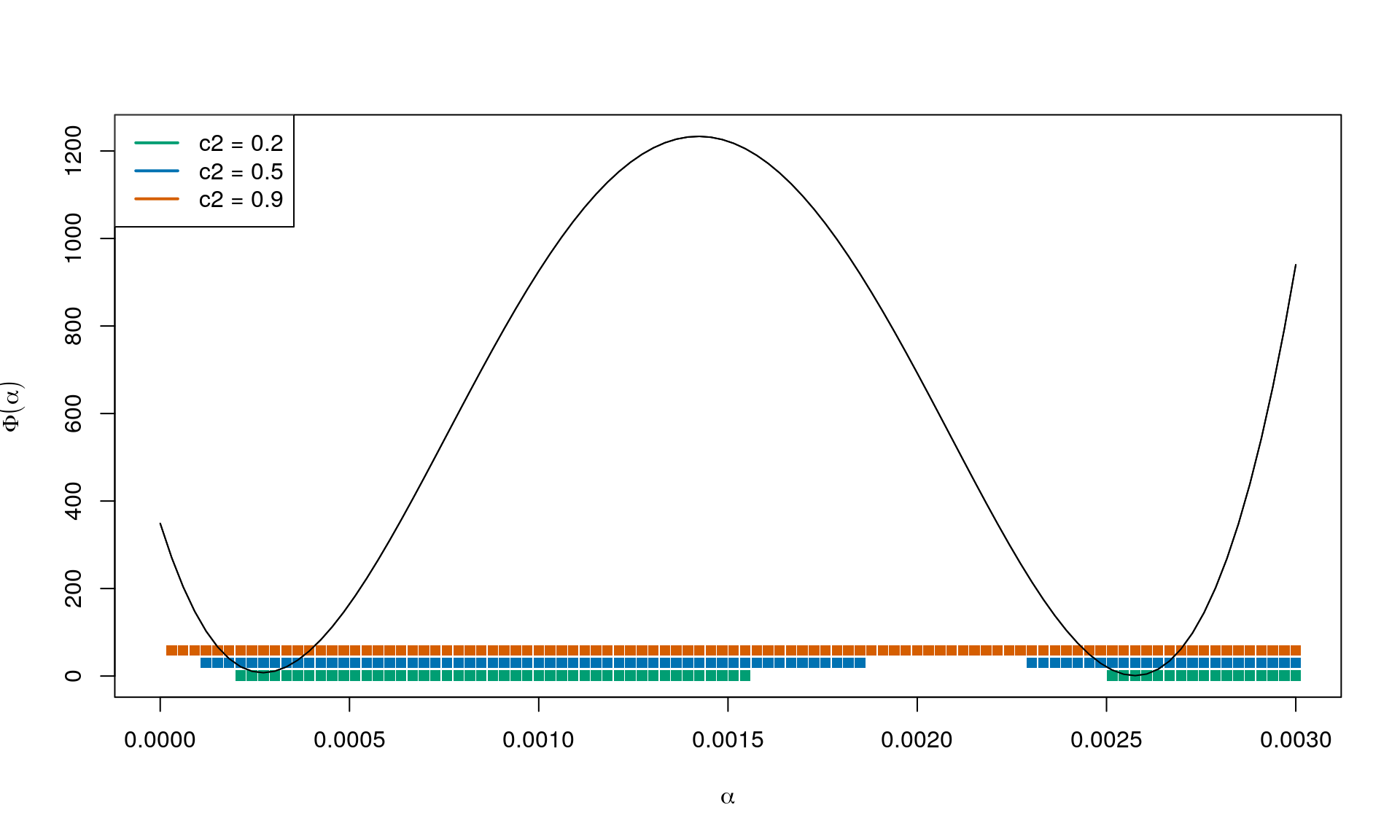

We are in and looking in direction which is a descent direction. We know from before that , so it goes downhill from where we are now. We are looking for a critical point such that . So at the next point we think the gradient should be less negative than it is now; still looking in direction .

So the directional derivative at the next iterate continuing in the same direction as got us here has to be greater than , with controlling the expression to range from 0 to , i.e. the directional derivative where we are standing now ().

Combining

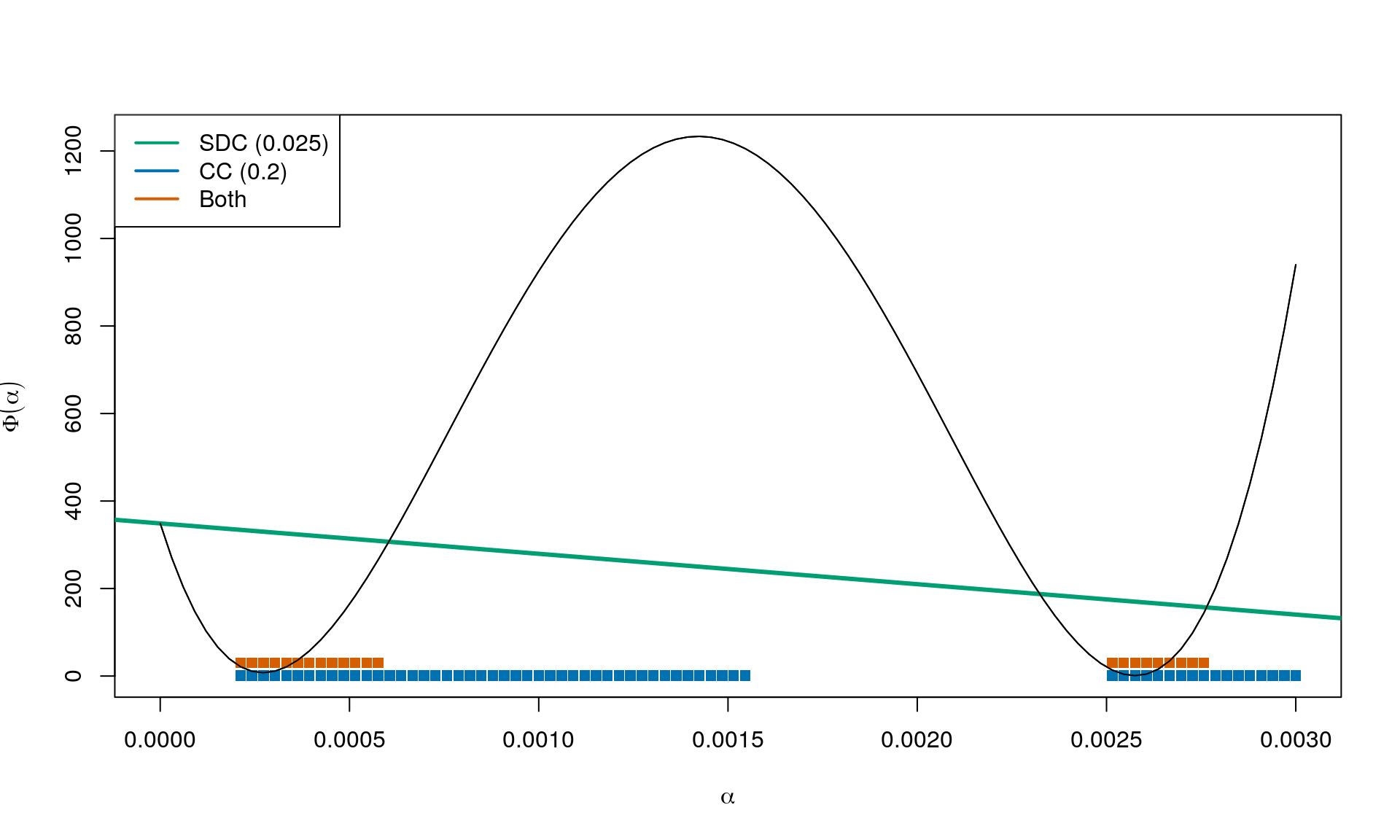

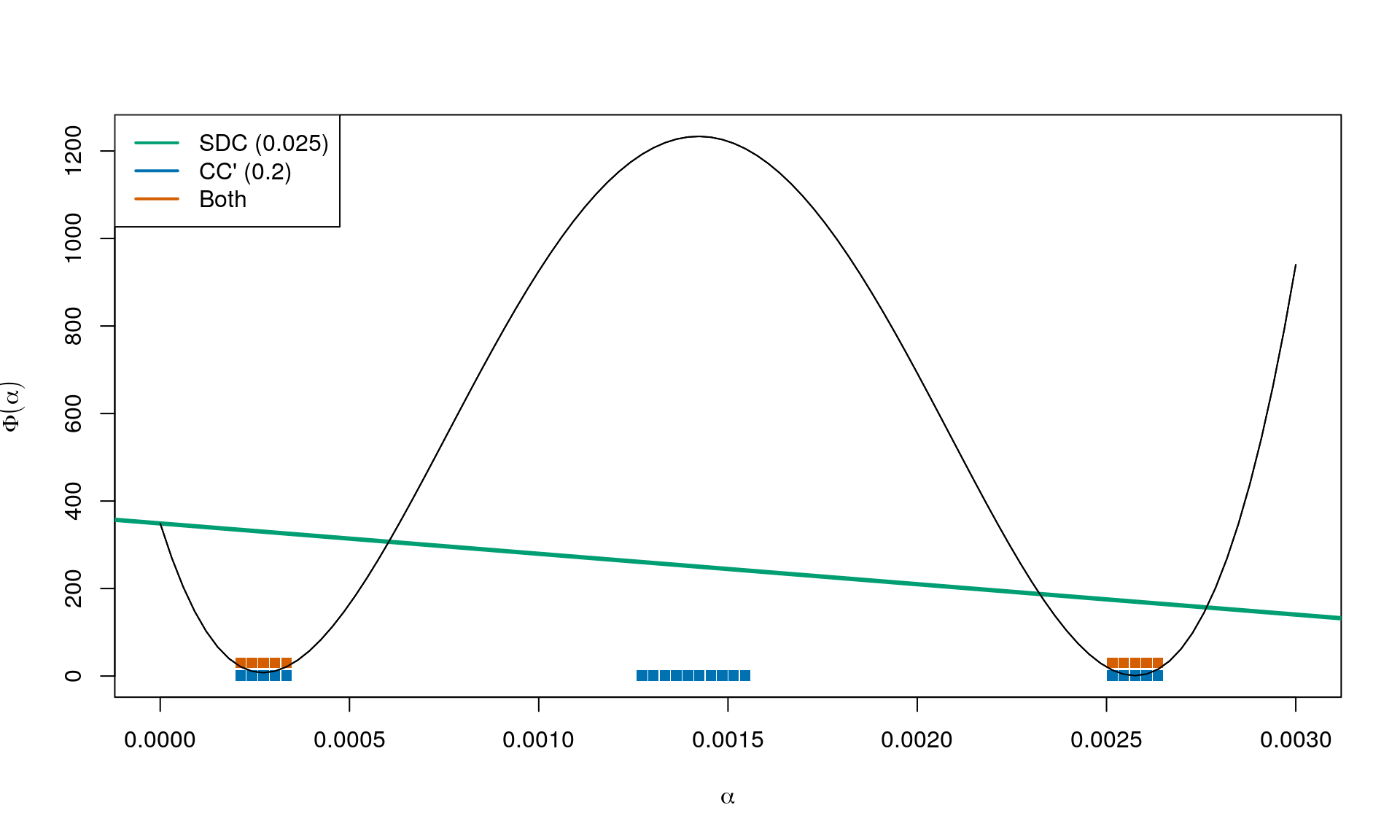

Result for and :

The strong Wolfe conditions

The curvature condition in the strong Wolfe conditions is:

This is very similar to CC, except now the directional derivative at the next iterate continuing in the same direction as got us here cannot be too positive.

Again combining with and we obtain:

Summary

Small values of (close to 0) means that we can go far (limited almost only by a horizontal line). Large values of (close to 1) means that the directional derivative in the next iterate can be almost as negative as it currently is.